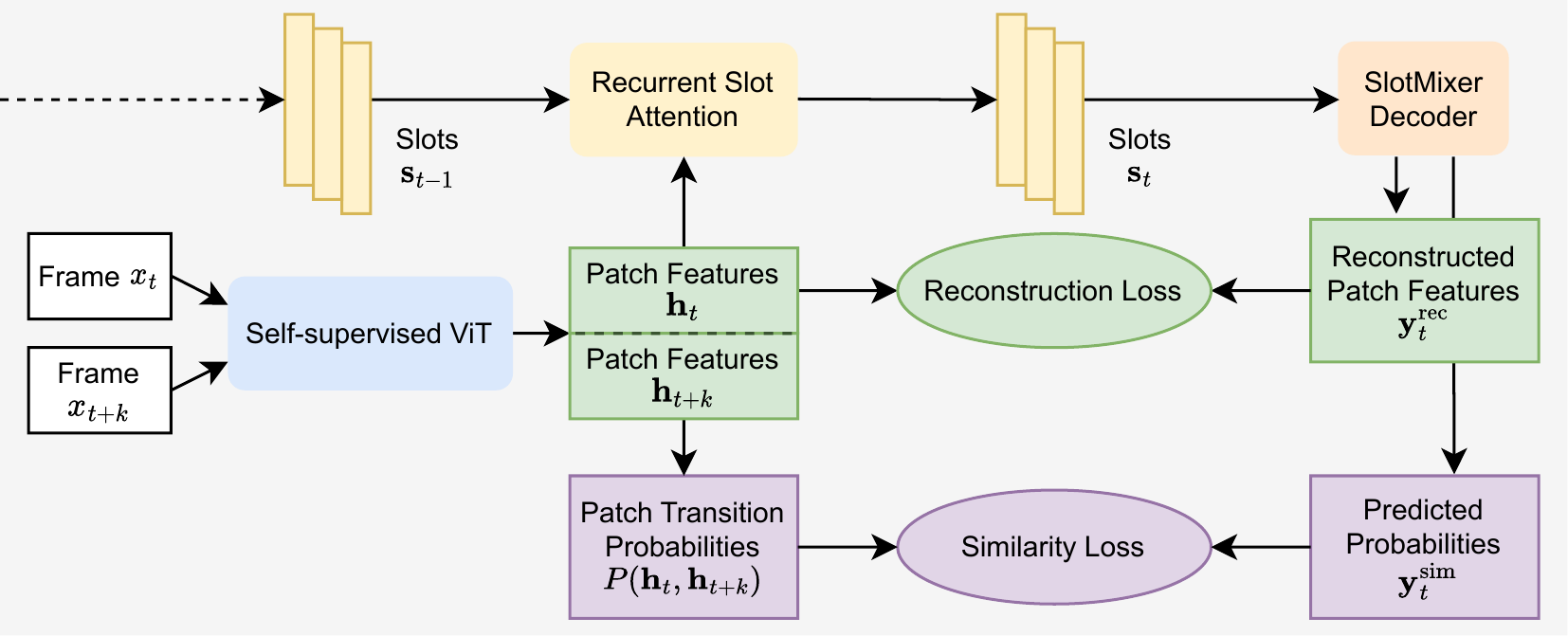

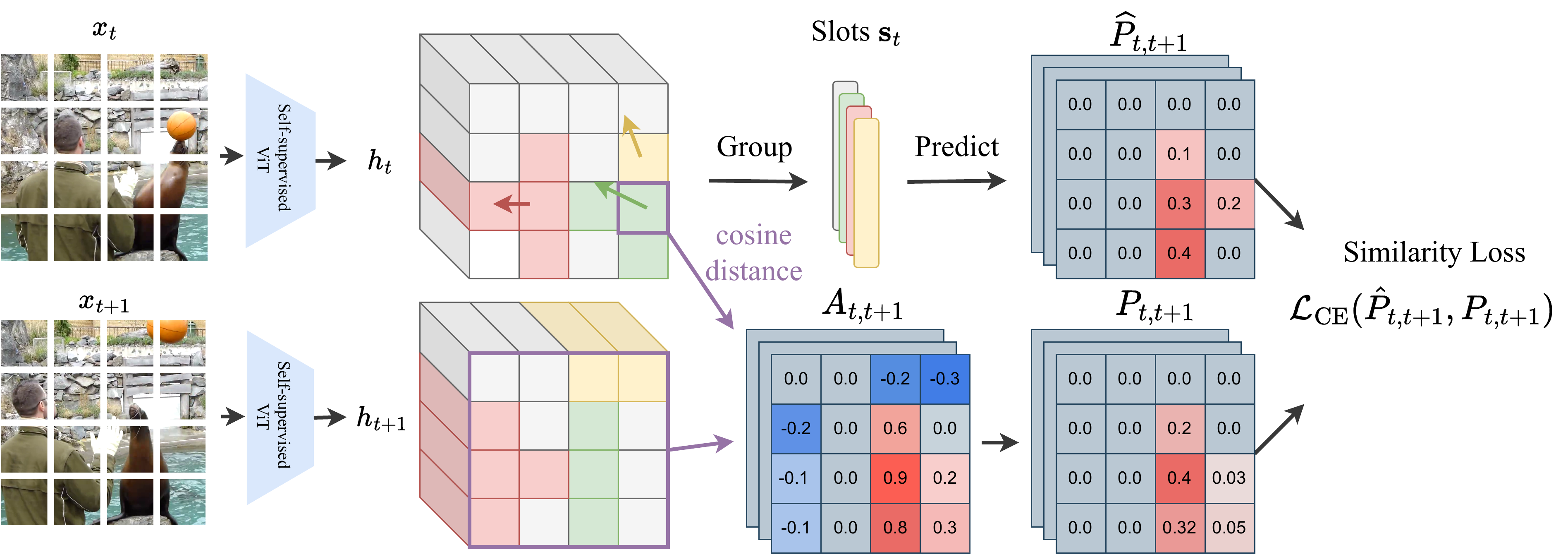

Self-supervised temporal feature similarity loss for training object-centric video models.

The loss incentivizes the model to group areas with consistent motion together.
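The sketch below shows one way a temporal feature similarity loss *could* be computed. It is a hedged illustration, not this project's implementation. It assumes the following setup. Each video frame is represented by per-patch features from a frozen encoder. The target for patch `i` of frame `t` is a temperature-scaled softmax over its cosine similarities to all patches of a later frame `t + k`. The model's predicted logits, which are hypothetically decoded from slots, are trained against these targets with cross-entropy.

The function names, the temperature of `0.1`, and the toy feature vectors are all assumptions made for illustration. Intuitively, patches that move together tend to have similar target distributions, so a model that must predict these distributions benefits from grouping such patches into the same slot.

```python
import math
from typing import List

Matrix = List[List[float]]


def cosine_similarity_matrix(a: Matrix, b: Matrix) -> Matrix:
    """Pairwise cosine similarity between rows of a (N x D) and rows of b (M x D)."""
    def normalize(v: List[float]) -> List[float]:
        norm = math.sqrt(sum(x * x for x in v)) or 1.0  # guard against zero vectors
        return [x / norm for x in v]

    a_n = [normalize(v) for v in a]
    b_n = [normalize(v) for v in b]
    return [[sum(x * y for x, y in zip(u, w)) for w in b_n] for u in a_n]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def log_softmax(row: List[float]) -> List[float]:
    """Numerically stable log-softmax over one row."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]


def similarity_targets(feats_t: Matrix, feats_tk: Matrix, temperature: float = 0.1) -> Matrix:
    """Hypothetical targets: for each patch at time t, a distribution over patches at t+k."""
    sim = cosine_similarity_matrix(feats_t, feats_tk)
    return [softmax([s / temperature for s in row]) for row in sim]


def temporal_similarity_loss(pred_logits: Matrix, feats_t: Matrix, feats_tk: Matrix,
                             temperature: float = 0.1) -> float:
    """Mean cross-entropy between similarity targets and the model's predicted logits."""
    targets = similarity_targets(feats_t, feats_tk, temperature)
    total = 0.0
    for p_row, logit_row in zip(targets, pred_logits):
        log_q = log_softmax(logit_row)
        total -= sum(p * lq for p, lq in zip(p_row, log_q))
    return total / len(targets)


# Toy usage: 3 patches with 2-D features at time t and at time t+k (made-up values).
feats_t = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1]]
feats_tk = [[0.9, 0.1], [0.1, 0.9], [0.0, 1.0]]
TAU = 0.1

# A "perfect" predictor reproduces the scaled similarities; a naive one predicts uniform logits.
ideal_logits = [[s / TAU for s in row] for row in cosine_similarity_matrix(feats_t, feats_tk)]
uniform_logits = [[0.0] * len(feats_tk) for _ in feats_t]

loss_ideal = temporal_similarity_loss(ideal_logits, feats_t, feats_tk, TAU)
loss_uniform = temporal_similarity_loss(uniform_logits, feats_t, feats_tk, TAU)
print(f"ideal: {loss_ideal:.4f}  uniform: {loss_uniform:.4f}")
```

Because cross-entropy equals the target entropy plus a KL term, the ideal predictor attains the minimum possible loss (the mean target entropy). The uniform predictor pays `log(N)` per patch. A real implementation would typically operate on batched tensors in a deep-learning framework rather than on Python lists.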